At its heart, Heckenlights relies on MIDI and live video streaming to bring the live experience to website visitors. Today I’ll explain how MIDI and live video streaming are done at Heckenlights.

Light shows are usually arranged around a particular performance and controlled by humans at event time. Arrangements in this style are great because they allow a lot of freedom and tons of channels to be controlled. Light operators can take control at any time.

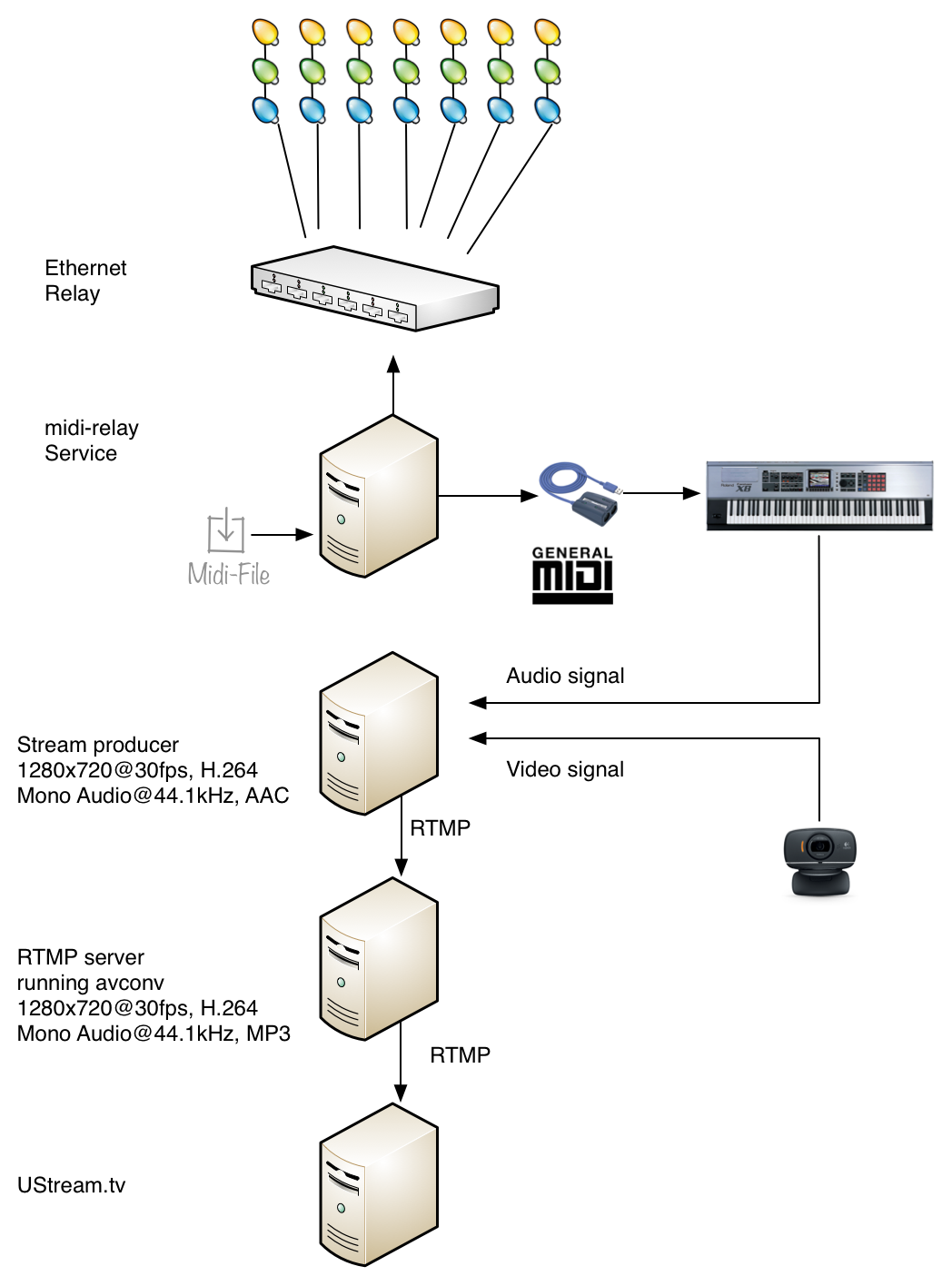

Heckenlights has no fixed program. The songs played on heckenlights.org are fully user-controlled; there is no arrangement up front. The key technology for Heckenlights is MIDI. Users can upload music as MIDI files to heckenlights.org, then watch and hear them play. The MIDI protocol represents music data in an easy-to-understand way. The relevant messages express whether a note is on or off (there are many more MIDI messages, but let’s go with on/off for now). The note state is translated to a light on/off state using an Ethernet-attached relay controlled by the midi-relay software.

The controller software takes a MIDI file as input, and the MIDI sequencer plays (“sequences”) the track. Playing MIDI with Java is great because the API lets you register a Receiver that receives all the MIDI messages. It’s then up to the receiver what it does with the data. In my case, it translates the individual notes to signals for the relay. This makes the lights dance to the rhythm of the music.

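    import java.net.URL;
    import javax.sound.midi.*;

    // Receiver that simply prints every MIDI message it gets
    Receiver receiver = new Receiver() {
        @Override
        public void send(MidiMessage mm, long timeStamp) {
            System.out.println("MidiMessage: " + mm);
        }

        @Override
        public void close() {
            // nothing to release
        }
    };

    // false = do not connect the sequencer to the default synthesizer
    Sequencer s = MidiSystem.getSequencer(false);
    s.getTransmitter().setReceiver(receiver);
    s.open();
    s.setSequence(MidiSystem.getSequence(new URL("http://www.bluegrassbanjo.org/buffgals.mid")));
    s.start(); // The playback runs on a different thread.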
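To make the note-to-light translation a bit more concrete, here is a minimal sketch of a Receiver that turns note on/off messages into relay states. It is not the actual midi-relay code: the class name `NoteToRelayReceiver`, the "note number modulo relay count" mapping and the in-memory `channels` array are assumptions for illustration only. A real receiver would send a command to the relay hardware instead of printing.

```java
import javax.sound.midi.MidiMessage;
import javax.sound.midi.Receiver;
import javax.sound.midi.ShortMessage;

/** Hypothetical sketch: maps MIDI note on/off messages to relay channel states. */
class NoteToRelayReceiver implements Receiver {

    private final boolean[] channels; // true = light on

    NoteToRelayReceiver(int channelCount) {
        this.channels = new boolean[channelCount];
    }

    @Override
    public void send(MidiMessage message, long timeStamp) {
        if (!(message instanceof ShortMessage)) {
            return; // meta and sysex messages carry no note state
        }
        ShortMessage sm = (ShortMessage) message;
        int command = sm.getCommand();
        if (command != ShortMessage.NOTE_ON && command != ShortMessage.NOTE_OFF) {
            return; // ignore program changes, controllers, ...
        }
        // Assumed mapping: note number (data1) modulo the number of relays
        int relay = sm.getData1() % channels.length;
        // A NOTE_ON with velocity 0 (data2) conventionally means "note off"
        boolean on = command == ShortMessage.NOTE_ON && sm.getData2() > 0;
        switchRelay(relay, on);
    }

    private void switchRelay(int relay, boolean on) {
        channels[relay] = on;
        // Placeholder for the real relay command
        System.out.println("relay " + relay + (on ? " on" : " off"));
    }

    boolean isOn(int relay) {
        return channels[relay];
    }

    @Override
    public void close() {
        // nothing to release in this sketch
    }
}
```

Such a receiver would be registered exactly like the printing receiver above, via `s.getTransmitter().setReceiver(new NoteToRelayReceiver(8))`, where 8 is an arbitrary relay count.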

Dancing lights without music are only half the fun, so Heckenlights.org provides an audio/video live stream. Let’s look at the audio part first. MIDI can control Christmas lights (hehe), but it was designed to control musical instruments. Most computers that work with MIDI have a built-in software synthesizer, and that was last year’s audio source. The internal software synthesizer is limited in audio quality, though: compare a built-in software synthesizer with a hardware instrument and you quickly hear the difference. Software synthesizers also require a fair bit of CPU time to produce the sound.

My controller machine is “just” a Mac Mini that also runs other services such as the live stream transcoder, so it’s quite busy. Since my basement is full of synthesizers, I decided to use an external MIDI interface this year: the audio stream now comes from a Roland Fantom X8 connected to the controller machine through a USB MIDI interface.

The midi-relay software controls the relays and also sends the MIDI signals to the hardware synthesizer. With the Java MIDI API, MIDI signals can be re-routed in real time: one data source, multiple uses. Audio from the MIDI tracks is played outside in my yard and is fed into the video live stream.
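One way to get "one data source, multiple uses" is a small fan-out receiver that forwards every message to several targets. This is only a sketch of the idea, not how midi-relay is actually wired; the class name `FanOutReceiver` is made up for this example.

```java
import java.util.ArrayList;
import java.util.List;
import javax.sound.midi.MidiMessage;
import javax.sound.midi.Receiver;

/** Hypothetical sketch: forwards each MIDI message to all registered receivers. */
class FanOutReceiver implements Receiver {

    private final List<Receiver> targets;

    FanOutReceiver(List<Receiver> targets) {
        this.targets = new ArrayList<>(targets); // defensive copy
    }

    @Override
    public void send(MidiMessage message, long timeStamp) {
        // Every target sees the same message with the same timestamp
        for (Receiver target : targets) {
            target.send(message, timeStamp);
        }
    }

    @Override
    public void close() {
        for (Receiver target : targets) {
            target.close();
        }
    }
}
```

In a setup like mine, the targets could be the receiver that drives the relays and the Receiver obtained from the USB MIDI interface with `MidiDevice.getReceiver()`. The fan-out receiver itself is then registered on the sequencer's transmitter.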

Standing in front of my house and watching the lights dance is great, but that’s just half the fun. The Heckenlights.org wow effect would not be possible without a live stream.

Video is captured by a simple webcam (Logitech C525 HD) on a tripod. The audio comes from the hardware synthesizer mentioned earlier. Streaming producer software captures and muxes both streams and then pushes the result to an RTMP server. Because the producer is a GUI application, it runs around the clock: no one is there to start and stop it outside the broadcasting time frame. It also requires a stable network connection, otherwise it just stops streaming. The first stream is therefore buffered on the RTMP server.

Now another piece comes into play. Last year I used avconv, the fork of ffmpeg, for streaming and encoding. The encoding ate a lot of CPU, and sometimes the image stuttered because of the overload. Today the avconv process “just” copies the stream from the RTMP server to UStream.tv. A simple stream copy does not stress the CPU, and avconv has an excellent command-line interface, so it’s very simple to start and kill the process.
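Starting and killing such a stream copy from the controller could look roughly like this. Treat it as a sketch under assumptions: the class name `StreamCopy` is made up, the URLs in the usage note are placeholders, and the argument list (`-c copy` to copy the streams without re-encoding, `-f flv` as the usual container for RTMP) is a plausible invocation, not necessarily the exact command I run.

```java
import java.io.IOException;
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch: runs avconv as a plain stream copy between two RTMP endpoints. */
class StreamCopy {

    /** Builds the avconv argument list; no re-encoding takes place. */
    static List<String> command(String sourceUrl, String targetUrl) {
        return Arrays.asList(
                "avconv",
                "-i", sourceUrl, // input: the buffered stream on the RTMP server
                "-c", "copy",    // copy audio and video streams as-is
                "-f", "flv",     // container format commonly used for RTMP
                targetUrl);      // output: the ingest URL of the streaming service
    }

    /** Starts avconv; its output goes to the console of the calling process. */
    static Process start(String sourceUrl, String targetUrl) throws IOException {
        return new ProcessBuilder(command(sourceUrl, targetUrl)).inheritIO().start();
    }

    /** Stops the copy at the end of the broadcasting time frame. */
    static void stop(Process process) {
        process.destroy();
    }
}
```

A scheduler would call something like `StreamCopy.start("rtmp://localhost/live/source", "rtmp://example.invalid/ingest/key")` when the broadcast begins and `StreamCopy.stop(process)` when it ends. Both URLs here are placeholders.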

Combining the MIDI control with the synthesized audio fed back into the stream creates the live experience at http://heckenlights.org. Buffering at the stream producer and at ustream.tv introduces a delay of 10 to 20 seconds, but having the live stream on the website is really amazing. It gives every website visitor the chance to play with Heckenlights and see what’s happening.

In general, live streaming is an advanced topic, and there are a lot of commercial solutions. It’s possible to set up live streaming entirely with open source software, but you have to dig into the topic.